Year End Report

AI Adoption in 2025

Why Governance, Not Innovation, Became the Leadership Test

Part 3 of 4

In Part 2 of this series, we examined how prior authorization became the first visible accountability test of healthcare operations in 2025. As utilization rose, oversight expanded, and as documentation expectations tightened, organizations were increasingly required to explain not just what decisions were made but how they were made.

Artificial intelligence is the next layer where that scrutiny is intensifying.

What many healthcare leaders expected to remain a controlled productivity enhancement instead became a core operational dependency. In 2025, AI moved decisively from experimentation to embedded infrastructure, influencing documentation, utilization patterns, clinical narratives, and administrative workflows. As a result, AI adoption became less about whether to deploy these tools and more about whether organizations could govern, explain, and defend their use.

This article explores how AI adoption actually unfolded in 2025, why governance emerged as the central challenge, and what the signals from this shift suggest for healthcare executives preparing for 2026.

This article is Part 3 of a four-part series designed to help payer and utilization management (UM) executives recalibrate in preparation for 2026.

What Analysts Expected for 2025

Entering 2025, analysts and advisory firms largely converged on a measured outlook for healthcare AI:

- Continued expansion of narrow, task-specific tools such as documentation support, coding assistance, and imaging analysis

- Increased investment in ambient clinical documentation and administrative automation

- Slow but steady progress toward AI governance standards

- Limited regulatory intervention beyond high-level guidance

- Ongoing concerns related to bias, explainability, and integration with legacy systems

The prevailing assumption was that AI adoption would remain incremental and manageable, with governance frameworks evolving alongside deployment.

What this framing missed was how quickly AI tools would move from assistive to embedded. Many executives approved AI initiatives assuming limited downstream impact, only to discover that these tools were shaping workflows, utilization decisions, and documentation narratives at scale.

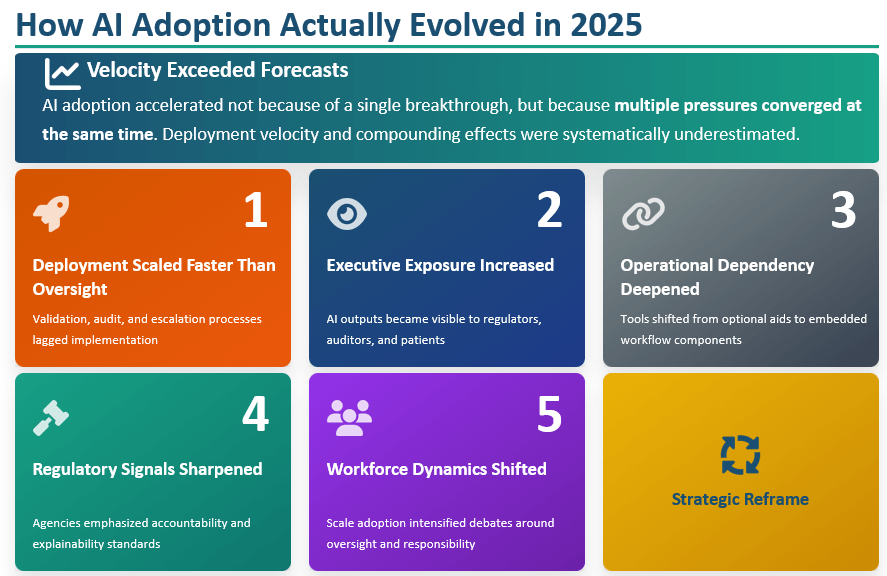

While most forecasts anticipated the direction of change, they underestimated its velocity and compounding effects. AI adoption accelerated not because of a single breakthrough, but because multiple pressures converged at the same time.

Operational Dependency Deepened

AI tools shifted from optional productivity aids to embedded components of daily workflows. This increased switching costs and introduced resilience concerns when systems failed, outputs drifted, or models behaved unpredictably.

Regulatory Signals Sharpened

Although comprehensive AI regulation remained fragmented, regulatory agencies increasingly emphasized accountability, transparency, and explainability, particularly when AI influenced clinical decisions or coverage determinations.

Workforce Dynamics Shifted

Clinicians and operational staff began relying on AI outputs at scale, intensifying debates around human oversight, responsibility, and error attribution.

Deployment Scaled Faster Than Oversight

Health systems and payers rapidly expanded AI use across clinical, operational, and financial workflows. In many cases, deployment outpaced the development of standardized validation, audit, and escalation processes.

Executive Exposure Increased

AI-assisted outputs influencing documentation, utilization management, and risk adjustment became more visible to regulators, auditors, clinicians, and patients. When outcomes were questioned, accountability often proved unclear.

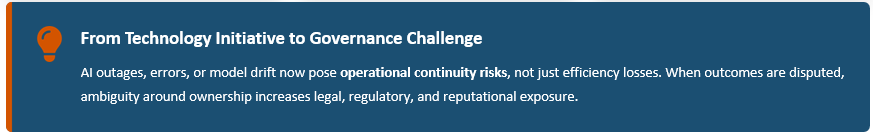

Together, these dynamics reframed AI from a technology initiative into a governance challenge. AI outages, errors, or model drift now pose operational continuity risks, not just efficiency losses. When outcomes are disputed, ambiguity around ownership increases legal, regulatory, and reputational exposure.

Why AI Governance Became the Inflection Point

AI now operates inside functions already under heightened scrutiny, including utilization management, appeals, documentation, and compliance. As a result, AI behavior is increasingly evaluated through the same lens as clinical and administrative decision-making.

In 2025, many organizations discovered that:

- AI-supported decisions were difficult to fully explain

- Documentation did not consistently reflect how AI outputs were used

- Oversight responsibilities were fragmented across teams

- Escalation paths were unclear when AI conflicted with human judgment

Governance gaps that might have been tolerated during pilot phases became harder to defend once AI influenced core operations.

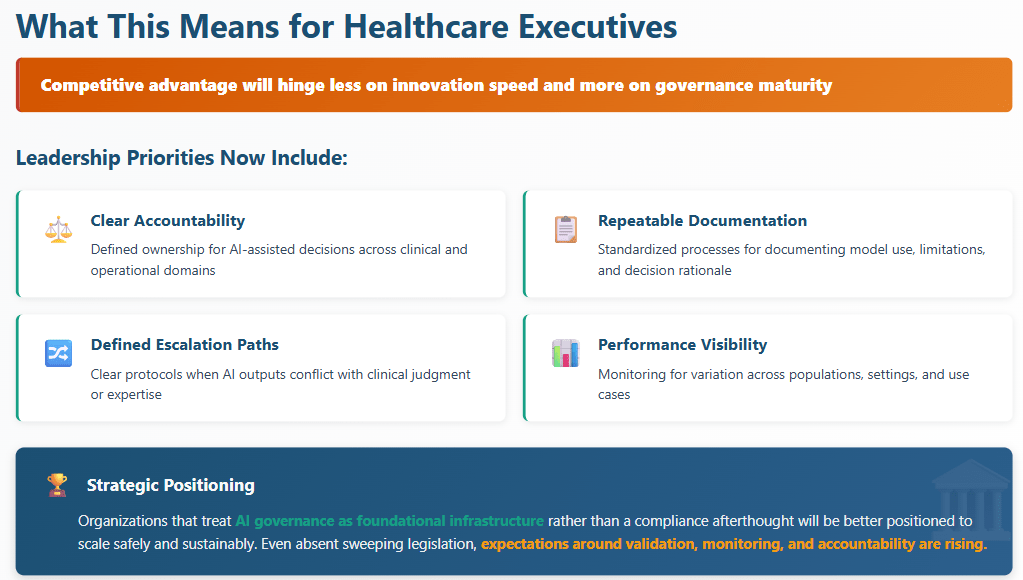

Why Governance Will Define AI Success

Top Signals for 2026

The forces shaping AI adoption in 2025 point to a more consequential phase ahead. For healthcare executives, several signals stand out as particularly relevant for 2026.

From Adoption Metrics to Accountability Standards

In 2026, success will be measured less by how widely AI is deployed and more by how clearly its role can be explained and defended.

Expect increased focus on:

- Documented decision logic and model intent

- Scrutiny of AI-assisted clinical and administrative outcomes

- Formalized expectations for human-in-the-loop oversight

- Demonstrated consistency across populations and use cases

Organizations that cannot articulate how AI influences decisions may face heightened regulatory and reputational risk.

Convergence of Oversight Across Domains

AI governance will no longer be siloed within IT or innovation teams. Oversight is increasingly converging across:

- Compliance and regulatory affairs

- Clinical quality and safety

- Risk adjustment, utilization management, and revenue integrity

- Data privacy and security

This convergence forces leadership teams to reconcile competing priorities, such as speed versus control and automation versus accountability, within a unified governance framework.

Movement Toward Standardized Evaluation

While no single national AI framework has emerged, signals point toward:

- More standardized evaluation criteria for performance and bias

- Increased use of structured data to audit AI-supported decisions

- Broader adoption of third-party validation and monitoring tools

These developments mirror earlier shifts in utilization management and quality reporting, where informal practices eventually gave way to standardized oversight. AI is increasingly being treated as an enterprise risk function rather than a discretionary technology asset.

What Happens Next

The trajectory for healthcare AI is becoming clearer. Adoption will continue. Oversight will intensify. Expectations for transparency will rise.

In 2026, the defining question for executives will not be whether AI delivers value, but whether their organization can demonstrate that it does so responsibly and consistently.

Investments made now in governance, documentation, and cross-functional accountability will shape how confidently organizations navigate the next phase of AI-enabled healthcare. Those that do not may find that the true cost of AI emerges not at deployment, but at accountability.

The final article in this series will examine how transparency, efficiency, and accountability converge to make these decisions externally visible, and why credibility has become a defining leadership asset.

Frequently Asked Questions (FAQs)

Why did AI governance become a major issue in healthcare during 2025?

AI governance became a major issue in 2025 because AI tools moved rapidly from limited pilots to embedded use across clinical and administrative workflows. As AI began influencing documentation, utilization management, and coverage decisions, organizations faced increased scrutiny around accountability, explainability, and oversight that governance frameworks had not fully anticipated.

What risks does AI adoption pose for payer and utilization management organizations?

AI adoption introduces risks related to decision accountability, documentation consistency, and audit defensibility. When AI outputs influence utilization or administrative decisions, unclear ownership, insufficient oversight, or inconsistent documentation can increase regulatory, legal, and reputational exposure.

What should healthcare executives focus on for AI governance in 2026?

In 2026, healthcare executives should focus on governance maturity rather than adoption volume. Priorities include defining accountability for AI-assisted decisions, documenting how AI tools are used and limited, establishing human-in-the-loop oversight, and ensuring consistency across populations, workflows, and oversight bodies.

Why Choose BHM?

We proudly stand behind our reputation in the industry as experts in utilization management.

Our proven process has served our clients well for over 20 years, and our unwavering focus on serving clients with excellence has set us apart.

Here are a few ways we demonstrate that commitment.

Trust

Reliable Partner

We’ve built our reputation on integrity, transparency, and delivering dependable services that organizations trust to support critical decisions in cost-effective care.

Expertise

Industry Knowledge

Our team of seasoned professionals and clinical experts provides unparalleled insights and guidance tailored to meet the complex needs of healthcare payers and providers.

Innovation

Driving Progress

We combine advanced technologies and forward-thinking strategies to deliver efficient, scalable solutions that enhance outcomes and streamline processes.

Outcomes

Measurable Impact

Our systems are designed to employ data-driven insights to help organizations improve quality, reduce costs, and meet compliance standards for lasting success.

Partner with BHM Healthcare Solutions

With over 20 years in the industry, BHM Healthcare Solutions is committed to providing consulting and review services that help streamline clinical, financial, and operational processes to improve care delivery and organizational performance.

We bring the expertise, strategy, and capacity that healthcare organizations need to navigate today’s challenges, so they can focus on helping others.

Are you ready to make the shift to a more effective process?