Industry Watch Alert

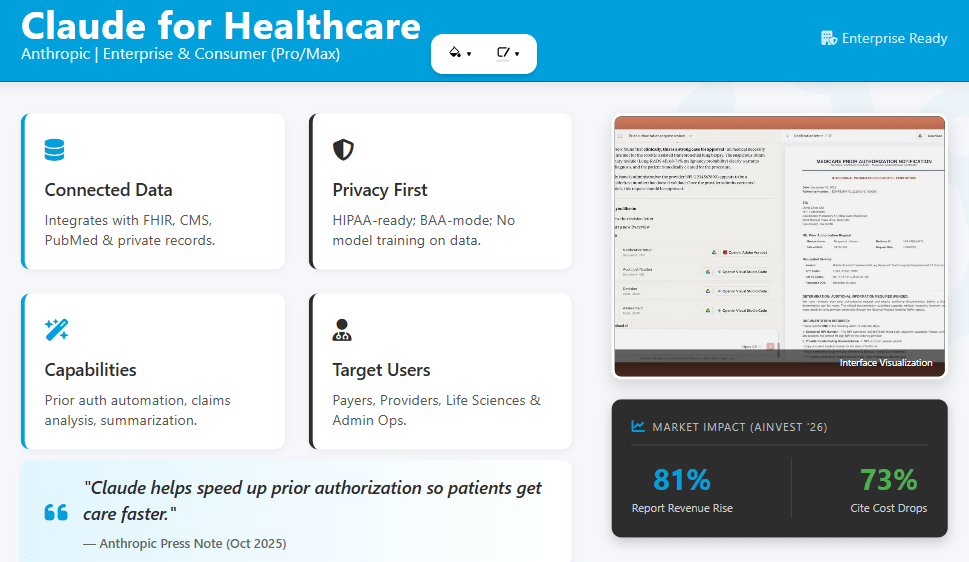

In the week following OpenAI’s introduction of ChatGPT Health, Anthropic announced Claude for Healthcare, a set of tools that connects directly to coverage policies, coding systems, provider registries, and scientific literature.

OpenAI also introduced OpenAI for Healthcare, an enterprise offering built around ChatGPT for Healthcare and its API.

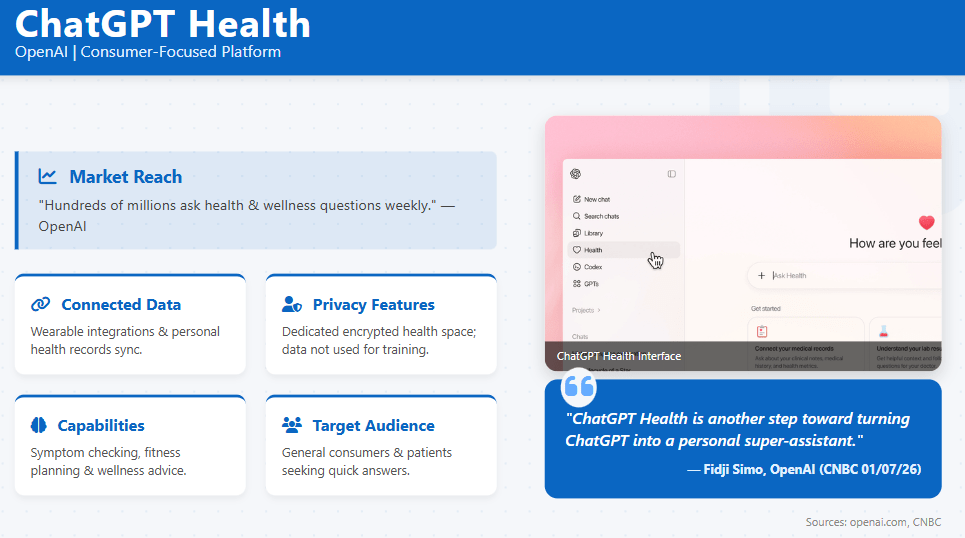

BHM’s prior Industry Watch Alert on January 7, 2026 summarized ChatGPT Health as a consumer-focused, privacy-enhanced environment for health data.

This alert builds on that foundation and compares Anthropic’s Claude for Healthcare with both ChatGPT Health and OpenAI for Healthcare where the official sources support direct comparison.

These announcements are commercial product developments rather than regulatory changes. However, they intersect directly with payer operations, utilization management (UM) workflows, and protected health information (PHI) governance.

The new information from Anthropic and OpenAI extends the picture to include enterprise-grade tools that sit at the intersection of coverage policy, clinical documentation, and member data.

The Impact

Comparative positioning of Anthropic and OpenAI health offerings

Based on the official announcements, the current health offerings can be summarized as follows:

Anthropic: Claude for Healthcare and Claude for Life Sciences

- Claude for Healthcare is described as a HIPAA-ready offering for providers, payers, and consumers.

- It introduces connectors to:

- CMS Coverage Database, including Local and National Coverage Determinations

- ICD-10 diagnosis and procedure codes, sourced from CMS and CDC

- National Provider Identifier (NPI) Registry

- PubMed and other biomedical literature sources

- It includes sample Agent Skills for:

- FHIR development

- Prior authorization review that cross-references coverage requirements, clinical guidelines, patient records, and appeal documents

OpenAI: ChatGPT Health and OpenAI for Healthcare

- ChatGPT Health, as covered in last week’s BHM alert, is:

- A consumer-facing, health-focused space within ChatGPT where U.S. users can connect medical records via b.well and link wellness apps such as Apple Health and other partners.

- Designed to help users interpret results, identify patterns, and prepare for clinical visits, with conversations stored in an encrypted, segregated environment that is not used to train foundation models.

- OpenAI for Healthcare includes:

- ChatGPT for Healthcare, described as a secure product for clinicians, administrators, and researchers, evaluated with physician-led benchmarks such as HealthBench and GDPval.

- An API offering that can be used under a Business Associate Agreement to support HIPAA compliance requirements for eligible customers.

From a payer and UM perspective, Claude for Healthcare and ChatGPT for Healthcare both position AI as an overlay across clinical, administrative, and evidence workflows, while ChatGPT Health remains focused on consumer-directed health navigation.

Prior authorization, coverage policy, and claims workflows

Anthropic and OpenAI both describe capabilities tied to prior authorization, coverage criteria, and documentation. The framing in the official materials provides a factual basis for comparison:

Anthropic (Claude for Healthcare)

- Describes a sample prior authorization review skill that can:

- Pull coverage requirements from the CMS Coverage Database or custom policies.

- Check clinical criteria against patient records in a HIPAA-ready manner.

- Propose a determination with supporting materials for payer review.

- Describes support for:

- Building stronger claims appeals by combining coverage policies, clinical guidelines, and patient records.

- Coordinating care and triaging patient portal messages.
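The three steps Anthropic describes for its sample prior authorization review skill (pull coverage requirements, check criteria against the patient record, propose a determination for payer review) can be illustrated with a minimal Python sketch. This is not Anthropic's implementation: the `Criterion` and `DraftDetermination` types, the `review_prior_auth` function, and the record fields are all hypothetical names invented for illustration, and the policy shown does not reflect any real coverage determination.

```python
from dataclasses import dataclass, field


@dataclass
class Criterion:
    """One coverage requirement; `key` names a field in a hypothetical patient record."""
    key: str
    description: str


@dataclass
class DraftDetermination:
    """A proposed outcome that still requires payer review."""
    proposal: str
    met: list = field(default_factory=list)
    unmet: list = field(default_factory=list)


def review_prior_auth(criteria, patient_record):
    """Check each criterion against the record and propose a draft determination."""
    met = [c.description for c in criteria if patient_record.get(c.key)]
    unmet = [c.description for c in criteria if not patient_record.get(c.key)]
    # The sketch never issues a denial; anything unmet is routed to a human reviewer.
    proposal = "criteria met" if not unmet else "needs clinical review"
    return DraftDetermination(proposal=proposal, met=met, unmet=unmet)


# Illustrative policy and record (assumptions, not real coverage criteria).
policy = [
    Criterion("conservative_therapy_tried", "Conservative therapy attempted"),
    Criterion("imaging_on_file", "Imaging on file"),
]
record = {"conservative_therapy_tried": True, "imaging_on_file": False}

draft = review_prior_auth(policy, record)
print(draft.proposal, draft.unmet)
```

The sketch keeps the output as a draft with its supporting met and unmet criteria, which mirrors the announcement's framing that the proposed determination goes to payer review rather than being final.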

OpenAI (ChatGPT for Healthcare and ChatGPT Health)

- ChatGPT for Healthcare is described as providing reusable templates for:

- Prior authorization support

- Discharge summaries

- Patient instructions

- Clinical letters

- ChatGPT Health is positioned for consumers to:

- Interpret test results

- Prepare for clinical appointments

- Consider insurance tradeoffs and care options based on their own health patterns.

For payers, utilization management leaders, and delegated entities, these statements indicate that both companies are targeting prior authorization and coverage-aligned documentation as primary use cases.

Oversight programs will likely need to account for:

- How coverage rules, internal medical policies, and local criteria are represented and updated in external AI platforms.

- How AI-generated draft determinations, rationales, and appeals content are incorporated into UM workflows that remain subject to regulatory and accreditation requirements.

- What documentation and logging are available for audits, independent review organizations, or litigation when AI tools contribute to the analysis underlying a determination.
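To make the documentation and logging question concrete, the following minimal Python sketch shows one way an organization might record that an AI-generated draft contributed to a determination. It is an assumption-laden illustration rather than a vendor feature: `build_audit_record` and every field name are hypothetical, and neither Anthropic nor OpenAI is described in the source as producing records in this shape.

```python
import hashlib
import json
from datetime import datetime, timezone


def build_audit_record(case_id, model_name, policy_version, ai_draft,
                       reviewer_id, final_decision):
    """Return a JSON-serializable record linking an AI draft to the human decision."""
    # Store a hash of the draft so the exact text can be verified later
    # without copying PHI into the log itself.
    digest = hashlib.sha256(ai_draft.encode("utf-8")).hexdigest()
    return {
        "case_id": case_id,
        "model_name": model_name,          # hypothetical identifier
        "policy_version": policy_version,  # which criteria set was applied
        "ai_draft_sha256": digest,
        "reviewer_id": reviewer_id,
        "final_decision": final_decision,
        "recorded_at": datetime.now(timezone.utc).isoformat(),
    }


# Illustrative values only.
entry = build_audit_record(
    case_id="CASE-001",
    model_name="example-model",
    policy_version="policy-v1",
    ai_draft="Draft rationale text produced by the AI tool.",
    reviewer_id="reviewer-42",
    final_decision="approved",
)
print(json.dumps(entry, indent=2))
```

Recording the policy version and a hash of the draft alongside the human reviewer's decision is one possible design for showing, in an audit or external review, what the AI tool contributed and who made the final call.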

Data connectors, PHI pathways, and third-party intermediaries

Both Anthropic and OpenAI describe extensive connectivity between their AI systems and external data sources, although the specific connectors and intermediaries differ.

Payer and policy data connections

- Anthropic explicitly connects Claude to:

- CMS Coverage Database

- ICD-10 codes

- NPI Registry

- OpenAI for Healthcare emphasizes integration with institutional policies, care pathways, and enterprise tools such as Microsoft SharePoint, rather than specific public policy databases, and uses retrieval techniques to ground responses in guidelines and evidence.

Consumer health data connections

- ChatGPT Health (prior BHM coverage) allows users to connect:

- Medical records through b.well

- Multiple wellness and lifestyle apps, with granular, app-level permissions and opt-in controls.

- Claude for Healthcare’s consumer-facing capabilities, according to Anthropic:

- Allow U.S. Claude Pro and Max subscribers to connect health records via HealthEx and Function, and to integrate Apple Health and Android Health Connect for fitness and wellness data.

- Provide opt-in controls, the ability to restrict what data is shared, and the option to revoke access and edit permissions.

These data flows indicate several relevant facts for payer and UM oversight:

- Payer-originated claims, clinical data, and coverage information can be accessed in multiple ways: directly via enterprise deployments and indirectly via consumer intermediaries such as b.well, HealthEx, and wellness apps.

- Both Anthropic and OpenAI describe contractual and technical controls aimed at HIPAA-supportive deployments, but consumer use that members initiate individually may fall under different legal regimes than covered entity workflows.

- The same coverage and claims information may appear in member appeals, grievances, or external reviews as AI-generated summaries, screenshots, or narrative explanations produced outside payer systems.

Privacy, security, and model training policies

The official announcements outline how Anthropic and OpenAI describe the handling of health data:

Anthropic

- States that Claude for Healthcare is available in HIPAA-ready configurations for covered entities and business associates.

- Indicates that users can choose which data to share, must explicitly opt in to connectors such as HealthEx and Function, and can disconnect or adjust permissions.

- States that Anthropic does not use users’ health data to train its models.

OpenAI

- For ChatGPT Health, states that health conversations, connected apps, and files are stored in an isolated environment with purpose-built encryption and are not used to train foundation models.

- For ChatGPT for Healthcare and the OpenAI API in OpenAI for Healthcare, states that:

- Patient data and PHI remain under the organization’s control.

- A Business Associate Agreement is available for eligible customers.

- Options include data residency, audit logs, and customer-managed encryption keys.

- Content shared with these healthcare products is not used to train models.

From an oversight standpoint, these statements provide a factual baseline for:

- Documenting vendor positions on data segregation, PHI use, and model training.

- Comparing technical safeguards such as encryption, isolation, and logging across vendors that support UM, prior authorization, or claims workflows.

- Framing discussions with internal privacy, security, and legal teams about how member data flows into AI tools under both enterprise and consumer configurations.

Clinical evaluation, benchmarks, and quality controls

Both Anthropic and OpenAI describe clinical evaluation frameworks for their health offerings:

Anthropic

- Reports that Claude Opus 4.5, the model underlying Claude for Healthcare, is evaluated on medical and life sciences benchmarks, including MedCalc and MedAgentBench, and on internal honesty evaluations related to factual hallucinations.

- Highlights Opus 4.5 performance on agentic simulations of medical tasks and life sciences scenarios, and references external evaluations such as LatchBio’s SpatialBench for spatial biology.

OpenAI

- For ChatGPT Health (prior BHM coverage), reports:

- Input from more than 260 physicians across 60 countries, incorporated into model behavior.

- Use of HealthBench, a physician-developed framework that evaluates safety, clarity, and escalation practices.

- For OpenAI for Healthcare, reports:

- Use of GPT-5 and GPT-5.2 models evaluated across HealthBench and GDPval.

- Multi-round physician-led red teaming and evaluation of real-world workflows.

These statements indicate that both companies have documented, benchmark-based approaches to evaluating medical performance. For payers and UM organizations, this may be relevant when aligning vendor evaluation practices with internal expectations for clinical quality, documentation standards, and communication of uncertainty.

Summary

Within one week, OpenAI and Anthropic both introduced health-focused AI offerings that link directly to clinical data, payer coverage information, and consumer health records. BHM’s prior alert described ChatGPT Health as a consumer-focused, encrypted environment.

Anthropic’s Claude for Healthcare extends this landscape by:

- Connecting Claude to CMS coverage policies, ICD-10 codes, and NPI data.

- Providing sample skills for prior authorization review and FHIR development.

- Allowing U.S. users to connect health records and wellness data via HealthEx, Function, and mobile health platforms, with explicit opt-in controls.

OpenAI’s OpenAI for Healthcare complements ChatGPT Health by:

- Introducing ChatGPT for Healthcare as an enterprise product evaluated with physician-led benchmarks.

- Offering API-based deployments with BAA availability and health-specific governance features.

While these developments do not alter regulatory requirements, they suggest that core payer and UM functions could operate in environments where third-party AI systems have direct access to coverage rules, coding frameworks, clinical literature, and member-level data. As a result, clinical oversight, vendor governance, configuration management, and auditability of AI-assisted determinations remain central considerations for payer and utilization management leaders.

As AI platforms accelerate across healthcare, clinical oversight grounded in both technological fluency and real-world utilization management experience becomes the differentiator. This is where BHM Healthcare Solutions brings decades of UM expertise, helping organizations apply innovation responsibly while maintaining rigorous clinical integrity.
